“Gemini 3 Flash costs $0.50 per million input tokens and $3.00 per million output tokens. Audio input is priced at $1.00 per million tokens. This represents the most cost-effective frontier-level AI model, delivering performance comparable to premium models at significantly lower rates.”

Google’s latest Flash model represents a significant evolution in AI accessibility. The third generation combines frontier-level intelligence with practical economics, making advanced AI capabilities available to more developers and businesses.

This model delivers performance that previously required expensive Pro-tier solutions. At the same time, it maintains the speed and efficiency that made Flash models popular for production applications.

Understanding the cost structure helps teams make informed decisions about integration, scaling, and budget planning. This guide covers everything from basic token rates to advanced optimization strategies.

⚡ Gemini 3 Flash Live Price Tracker

Real-time pricing for Google’s latest Gemini 3 Flash Model

📥 Text Input Pricing

⚡ Gemini 3 Flash Live Price Tracker

📤 Text Output Pricing

⚡ Gemini 3 Flash Live Price Tracker

🎤 Audio Input Pricing

⚡ Gemini 3 Flash Live Price Tracker

🎯 Key Features Latest

⚡ Gemini 3 Flash Live Price Tracker

💰 Cost Calculator

🚀 Why Choose Gemini 3 Flash?

- Frontier Intelligence: Pro-grade reasoning at Flash-level speed and cost

- Exceptional Speed: 3x faster than Gemini 2.5 Pro with superior performance

- Cost Effective: Significantly cheaper than Gemini 3 Pro while maintaining quality

- Massive Context: Handle up to 1 million tokens in a single request

- Multimodal Excellence: Process text, images, and audio seamlessly

- Agent-Ready: Optimized for agentic workflows and tool usage with 78% on SWE-bench

- Production Ready: Default model in Gemini app and rolling out globally

- Token Efficient: Uses 30% fewer tokens than Gemini 2.5 Pro on average

📊 Gemini 3 Flash vs. Other Models

| Model | Input Price | Output Price | Speed |

|---|---|---|---|

| Gemini 3 Flash | $0.50 / 1M | $3.00 / 1M | ⚡⚡⚡ Fastest |

| Gemini 2.5 Flash | $0.30 / 1M | $2.50 / 1M | ⚡⚡ Fast |

| Gemini 3 Pro | ~$2.00 / 1M | ~$12.00 / 1M | ⚡ Standard |

Value Proposition: Gemini 3 Flash delivers frontier-level intelligence at a fraction of the cost of Gemini 3 Pro. While slightly more expensive than 2.5 Flash, it offers significant performance improvements, superior reasoning capabilities, and 3x faster processing speed. The model uses 30% fewer tokens on average, which can offset the higher per-token cost in real-world applications.

💡 Practical Use Cases

- Agentic Coding: Build powerful coding agents with 78% success rate on SWE-bench Verified

- Interactive Apps: Power responsive applications with low-latency real-time processing

- Tool-Heavy Workflows: Handle complex multi-step tasks with reliable function calling

- Content Analysis: Process large documents, videos, and audio files efficiently

- Rapid Prototyping: Iterate quickly on UI/UX designs and app development

- Business Automation: Automate customer feedback analysis, email drafting, and more

📱 Where to Access Gemini 3 Flash

For End Users: Gemini 3 Flash is now the default model in the Gemini app (free) and AI Mode in Google Search, available globally.

For Developers: Access via Gemini API, Google AI Studio, Vertex AI, Gemini Enterprise, Gemini CLI, Android Studio, and Google Antigravity.

Note: The model is free for consumer use in the Gemini app and Search. API and enterprise usage follows the pricing listed above.

Core Cost Structure

The model uses straightforward token-based billing. Input costs $0.50 per million tokens. Output costs $3.00 per million tokens. Audio input has a separate rate of $1.00 per million tokens.

This represents a 67% increase from the previous Flash generation. However, performance improvements justify the cost adjustment. Benchmarks show superior results across coding, reasoning, and multimodal tasks.

Token efficiency also improved. The model uses approximately 30% fewer tokens for typical tasks compared to earlier versions. When combined with lower per-token rates than Pro models, real-world costs remain competitive.

Standard Rate Card:

| Input Type | Cost Per 1M Tokens | Cost Per 1K Tokens |

| Text Input | $0.50 | $0.0005 |

| Text Output | $3.00 | $0.003 |

| Audio Input | $1.00 | $0.001 |

Free Access vs Developer Billing

Consumer access through the Gemini app remains free. Users can interact with the model at no cost for everyday tasks. This includes conversations, content generation, and basic analysis.

Developer access through Google AI Studio offers limited free testing. New users receive free requests within rate limits to evaluate the model. Once you move to production or exceed free tier limits, standard billing applies.

API usage for applications requires a Google Cloud project with billing enabled. There is no monthly subscription. You pay only for actual token consumption.

Rate limits vary by tier. Free tier users face strict limits of 5-15 requests per minute. Paid tier customers receive production-ready limits that scale with usage patterns.

How Developer Billing Works

The billing system tracks every token processed. Input tokens include your prompts, system instructions, and any context you provide. Output tokens include the model’s responses.

Tokens are not equivalent to words. A rough estimate is 4 characters per token in English. Languages with different character sets may have different ratios.

Google provides a countTokens endpoint. This lets you calculate costs before sending requests. The SDK also includes a tokenizer for local estimation.

Example Cost Calculations

Consider a customer service chatbot handling 100,000 conversations monthly. Each conversation averages 500 input tokens and generates 300 output tokens.

Total input: 100,000 conversations × 500 tokens = 50 million tokens

Input cost: 50 million × $0.50 per million = $25.00

Total output: 100,000 conversations × 300 tokens = 30 million tokens

Output cost: 30 million × $3.00 per million = $90.00

Monthly total: $115.00 for 100,000 customer interactions

Evolution Across Versions

The Flash family has progressed through three major releases. Each generation brought specific improvements in capability, speed, and economic efficiency.

Version 2.0: Foundation

The 2.0 Flash model established the Flash line as a high-volume option. It provided basic multimodal understanding and reasonable performance for straightforward tasks.

The context window was limited. Complex reasoning tasks often require upgrading to Pro models. The version served well for classification, basic chat, and simple content generation.

Version 2.5: Refinement

Version 2.5 introduced significant quality improvements. Input costs $0.30 per million tokens. Output costs $2.50 per million tokens.

This version bridged the gap between Flash and Pro tiers. Many tasks that previously required Pro became viable on the more economical Flash option.

Processing speed remained excellent. The model handled real-time applications effectively while delivering improved output quality.

Version 3: Current Generation

The current release surpasses many previous Pro-tier models on key benchmarks. It achieves 78% on SWE-bench Verified and 90.4% on GPQA Diamond.

Context window expanded to 1 million tokens. This matches Pro-tier capabilities for document analysis and extended conversations.

Visual reasoning improved substantially. The model handles complex spatial understanding and detailed image analysis that was previously unreliable in Flash variants.

Generation Comparison:

| Feature | Version 2.0 | Version 2.5 | Version 3.0 |

| Input Cost | $0.08/1M | $0.30/1M | $0.50/1M |

| Output Cost | $0.30/1M | $2.50/1M | $3.00/1M |

| Context Window | Limited | Extended | 1M tokens |

| Best Use Case | Basic chat | Balanced tasks | Complex work |

| Performance | Entry level | Improved | Frontier-level |

Competitive Landscape

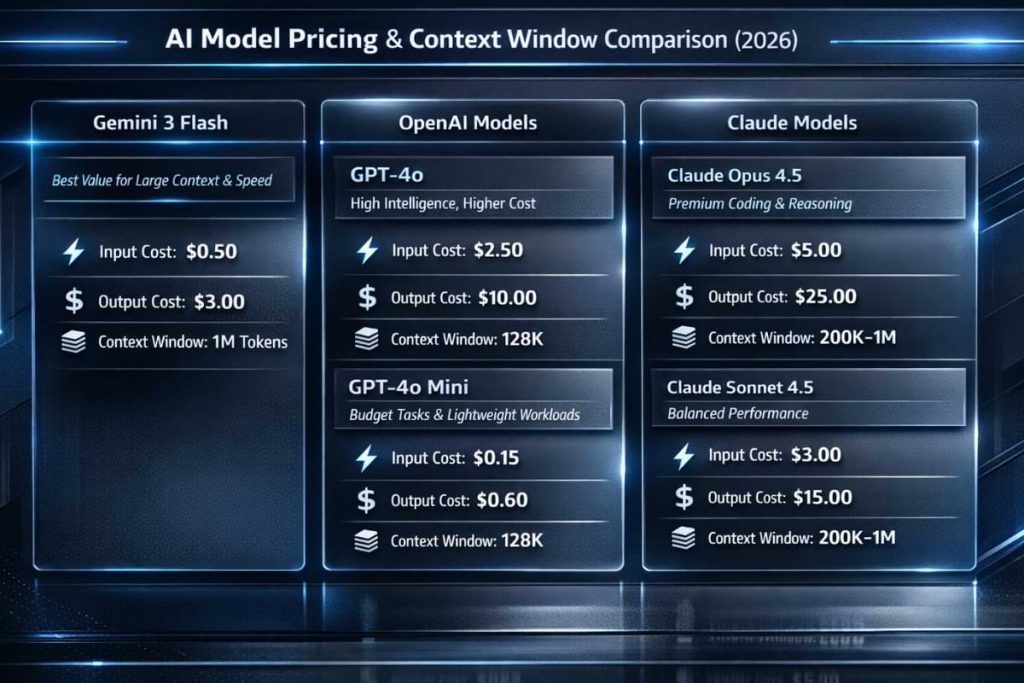

Understanding how Google’s offering compares to alternatives helps with strategic decisions. OpenAI and Anthropic provide strong competition with different strengths.

OpenAI Models

GPT-4o costs $2.50 input and $10.00 output per million tokens. This is 5x more expensive for input and over 3x more for output compared to the Flash model.

GPT-4o Mini offers budget-friendly rates at $0.15 input and $0.60 output. This makes it cheaper than Flash for simple tasks where maximum intelligence is not required.

Context windows differ significantly. GPT-4o supports 128K tokens compared to Flash’s 1M token window. For document-heavy workloads, Flash provides better value through its larger context capacity.

Claude Models

Claude Opus 4.5 represents the premium tier at $5.00 input and $25.00 output. It excels at coding with an 80.9% SWE-bench score but costs 10x more for input than Flash.

Claude Sonnet 4.5 offers balanced performance at $3.00 input and $15.00 output. This is 6x more expensive for input and 5x more for output compared to Flash.

Claude Haiku 4.5 provides the closest competition at $1.00 input and $5.00 output. It costs twice as much as Flash while offering near-frontier performance for speed-critical applications.

Market Position Analysis:

| Model | Input/1M | Output/1M | Context |

| Gemini 3 Flash | $0.50 | $3.00 | 1M tokens |

| GPT-4o | $2.50 | $10.00 | 128K tokens |

| GPT-4o Mini | $0.15 | $0.60 | 128K tokens |

| Claude Opus 4.5 | $5.00 | $25.00 | 200K tokens |

| Claude Sonnet 4.5 | $3.00 | $15.00 | 200K/1M |

| Claude Haiku 4.5 | $1.00 | $5.00 | 200K tokens |

Value Proposition Analysis

The Flash model positions itself in the efficiency tier. It costs significantly less than flagship models while approaching or exceeding their performance on many tasks.

For high-volume applications, the cost differential compounds. A system processing 1 billion tokens monthly would spend $500 on Flash input versus $2,500 on GPT-4 or $3,000 on Claude Sonnet.

The larger context window provides additional value. Tasks requiring extensive context work more efficiently on Flash than on models with smaller windows that require chunking or truncation.

Understanding Token Economics

Tokens represent the fundamental unit of AI model consumption. They are not words or characters but processing units that the model uses internally.

A typical English word equals roughly 1.3 tokens. The phrase ‘artificial intelligence‘ uses approximately 2.5 tokens. Longer technical terms consume more tokens.

Punctuation and spacing affect token count. Multiple spaces or special characters can increase consumption. Well-structured prompts optimize token usage.

Different languages have different token densities. Chinese and Japanese typically use more tokens per word than English. Russian and Arabic fall between these extremes.

Why Flash Models Cost Less

Flash models prioritize efficiency through architectural optimizations. They use smaller parameter counts and faster inference pathways compared to Pro models.

This does not mean lower quality. The third-generation Flash matches or exceeds many Pro models on specific tasks through better training techniques and data quality.

Lower costs reflect reduced computational requirements. Flash processes requests faster while using less infrastructure, allowing Google to charge lower rates.

Typical Monthly Costs for Applications

Small applications with 10,000 user interactions monthly:

- Average 200 input tokens and 150 output tokens per interaction

- Total: 2M input + 1.5M output tokens

- Cost: approximately $5.50 per month

Medium applications with 100,000 interactions monthly:

- Average 500 input tokens and 300 output tokens per interaction

- Total: 50M input + 30M output tokens

- Cost: approximately $115 per month

Large applications with 1 million interactions monthly:

- Average 800 input tokens and 400 output tokens per interaction

- Total: 800M input + 400M output tokens

- Cost: approximately $1,600 per month

Strategic Considerations for Businesses

Startups benefit from the low entry cost. Testing AI features requires minimal upfront investment. Free tier access allows prototype validation before committing to infrastructure.

Scaling costs remain predictable. Linear token-based billing means growth does not trigger tier jumps or rate increases. Budget forecasting becomes straightforward.

SaaS companies find particular value in Flash models. The combination of quality and affordability supports margin-positive AI features without excessive infrastructure costs.

Cost Optimization Strategies

Context caching reduces repeated content costs by 90%. For applications with consistent system prompts or knowledge bases, cache writes cost 1.25x base rate, but cache reads cost only 0.1x.

Batch processing offers 50% discounts. Non-urgent workloads processed asynchronously receive half-price rates, making data analysis and content generation more economical.

Prompt engineering matters. Concise, well-structured prompts reduce token consumption without sacrificing output quality. Each unnecessary word increases costs across all requests.

Model routing creates efficiency. Simple tasks route to cheaper models like Flash-Lite. Complex reasoning escalates to the full Flash model only when necessary.

Real-Time Application Considerations

Latency remains excellent despite the lower cost. Flash processes requests quickly enough for chat interfaces and real-time assistants.

Streaming responses work seamlessly. Users see output as it generates rather than waiting for complete responses. This improves perceived performance.

Rate limits scale with usage tier. Production applications receive sufficient capacity for smooth operation. Free tier limits only affect testing and prototyping.

Common Questions

Does the third generation cost more than version 2.5?

Yes. Input costs increased 67% from $0.30 to $0.50 per million tokens. Output rose 20% from $2.50 to $3.00. However, performance improvements and token efficiency gains offset much of the increase for typical workloads.

Is there truly free access?

Consumer access through the Gemini app costs nothing. Developers receive free testing access through Google AI Studio with rate limits. Production API usage requires billing after the free tier exhaustion.

How much does it cost per 1,000 tokens?

Input costs $0.0005 per thousand tokens. Output costs $0.003 per thousand tokens. Most pricing discussions use per-million rates because individual requests often exceed thousands of tokens.

Which model offers better value for startups?

Flash provides excellent startup value. Lower costs support experimentation and iteration. The model handles most production tasks effectively. Reserve expensive alternatives for specific use cases that truly require maximum capability.

Can batch processing reduce costs significantly?

Batch API provides 50% savings. Input drops to $0.25 per million tokens. Output falls to $1.50 per million. This works well for content generation, data analysis, and other non-urgent workloads.

Does the large context window cost extra?

No. Unlike some competitors, Google does not charge premium rates for the full 1 million token context window. All tokens within the window use standard pricing regardless of position or length.

Which tasks benefit most from this model?

Customer service chatbots, content generation, code assistance, document analysis, and data processing work exceptionally well. Tasks requiring extended reasoning over large contexts particularly benefit from the 1M token window at this price point.

How does context caching work?

Frequently used content can be cached for repeated requests. The first request pays 1.25x standard rates to write the cache. Subsequent requests using cached content pay only 0.1x rates for those tokens, achieving 90% savings on repeated content.

What monitoring tools help control expenses?

Google Cloud billing dashboards track usage in real time. Set spending limits and alerts to prevent unexpected charges. The countTokens API helps estimate costs before sending requests. Most applications also implement their own tracking for granular visibility.

Is Gemini 3 Flash cheaper than GPT-4?

Yes. Gemini 3 Flash is 5x cheaper for input ($0.50 vs $2.50) and over 3x cheaper for output ($3.00 vs $10.00) compared to GPT-4o. It also offers a larger context window (1M vs 128K tokens), making it more economical for document-heavy workloads.

Making the Decision

The third-generation Flash model occupies a unique market position. It delivers frontier-level intelligence at mid-tier costs, challenging previous assumptions about the price-performance tradeoff.

For most production applications, this model provides sufficient capability at sustainable costs. The combination of quality, speed, and affordability makes it suitable for diverse use cases.

Consider alternatives when specific requirements emerge. Maximum coding capability might justify Claude Opus. Extreme budget constraints might favor GPT-4o Mini. But for balanced needs, Flash offers compelling value.

Testing remains free through Google AI Studio. Prototype your application to verify the model meets your requirements before committing to production deployment.

The pricing structure aligns with modern application economics. Token-based billing creates predictability. Optimization features like caching and batching provide control over expenses.

As AI integration becomes standard rather than experimental, cost-effective solutions gain importance. Flash models demonstrate that advanced capabilities need not require enterprise budgets.